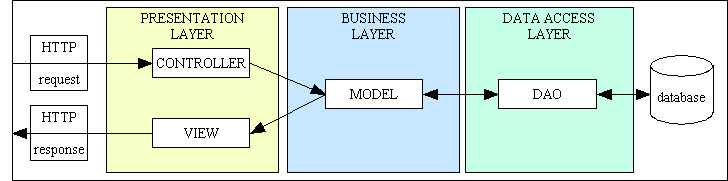

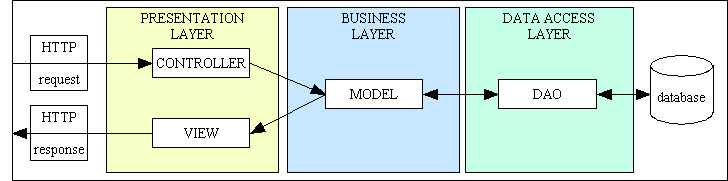

Figure 1 - A simplified view of my application structure

The purpose of this document is to answer my critics who insist on claiming that my methods of implementing the principles of OOP, as shown in my RADICORE framework, are inferior, wrong, impure and "not proper OOP" despite the fact that I can prove that my results are superior to theirs. They claim that I am not following "best practices" when what they actually mean is that I am not following the same set of practices as them. By constantly promoting their questionable versions of "best practices" and miscomprehension of basic programming principles, by constantly criticising me for having the audacity to voice an opinion which is different from theirs, by constantly promoting pedantry and dogmatism over pragmatism, these people are steering the next generation of computer programmers down the wrong path. This will, in my humble opinion, prevent them from creating efficient and cost-effective software, so can have nothing but a detrimental effect on the whole of our industry.

I did not create my RADICORE framework in a single moment of inspiration, it evolved over a period of several decades after building one database application after another while being part of different teams in different organisations using several different programming paradigms. I was exposed to several different variations of "best practice", but after concentrating on those ideas which produced the best results with the fewest problems I graduated from creating libraries of reusable code to creating frameworks which increased the productivity of all the programming teams which I led. You can read the full story in Evolution of the RADICORE framework.

All of today's novice programmers are taught that object-oriented programming languages are better than non-OO languages, but is this true? While they are different (as explained in What is the difference between Procedural and OO programming?) their use does not guarantee that the results will be automatically superior. Only someone who has spent time developing software in a non-OO language and then switched to developing the same type of software in an OO language is actually qualified to make that kind of judgement. I spent the first 20 years of my software career in the development of database applications using non-OO languages such as COBOL and UNIFACE, and then the last 20 years developing the same type of application using PHP with its OO capabilities. I know from personal experience that simply using a language that has certain capabilities does not guarantee that you will automatically produce good software, it is how you make use of those capabilities which counts. It is possible for a crap programmer to write crap code in any language just as it is possible for a good programmer to write good code in the same languages. It is not what you use that counts, it is the way that you use it.

It is simply because I have been developing database applications for 40 years using different languages and different paradigms that I feel qualified to judge whether one language is better than another. Because each new language has new capabilities it should be possible to use these new capabilities to be more productive. If this is not the case then you must be doing something wrong.

Switching from one programming paradigm and/or language to another should not be undertaken lightly. There should be some discernible benefits otherwise you will be taking a step backwards. In some cases your employer may want to switch to a new language as the current one may not provide the facilities that make your applications more attractive to customers. This is why in the 1990s my employer switched from COBOL, with its simple green screen technology, to UNIFACE which provided a GUI which supported additional controls such as radio buttons, checkboxes and dropdown lists as well as being able to access quite easily a variety of relational databases. I switched from UNIFACE to PHP in 2002 after I saw that the future lay in web-based applications, and a particular project convinced me that UNIFACE was too clunky to be of practical use. I looked for a replacement language and chose PHP as it was better suited to the development of web-based applications. I was also very impressed with how easy it was to produce results with simple code.

Using a language with OO capabilities is no good unless you learn how to make proper use of those capabilities. I had heard about this new paradigm called Object-Oriented Programming, but I didn't know exactly what it was nor why it was supposed to be better. I read various descriptions, as shown in What is Object Oriented Programming (OOP)?, but none of them seemed to hit the nail on the head as much as the following:

Object Oriented Programming is programming which is oriented around objects, thus taking advantage of Encapsulation, Inheritance and Polymorphism to increase code reuse and decrease code maintenance.

Using a new paradigm just because it is different is one thing, but this new object-oriented approach was claimed to be better because it supposedly provided benefits such as:

The power of object-oriented systems lies in their promise of code reuse which will increase productivity, reduce costs and improve software quality.

OOP is easier to learn for those new to computer programming than previous approaches, and its approach is often simpler to develop and to maintain, lending itself to more direct analysis, coding, and understanding of complex situations and procedures than other programming methods.

So much for the promises, but what about the reality? Could OOP live up to all this hype? How easy would it be to write programs which are Object Oriented? Would I actually be able to achieve the objectives which were promised?

I did not go on any training courses, instead I read the PHP manual, bought some books, and found some articles on the internet which other developers had written as an example of how things could be done. I noticed straight away that each author's ideas were completely different from everybody else's, so I experimented on my home PC to find the best solutions for me. My objective was to produce as many reusable components as possible, thus being able to achieve results by having to write less code. This I have done, as shown by the Levels of Reusability which are provided in my framework. However, my critics (of whom there are many) insist that my results are invalid simply because I am not following their interpretations of "best practices". I am results-oriented, not rules-oriented, and my customers pay me for the results I achieve, not the rules which I follow. I refuse to follow their rules for the simple reason that it would degrade the quality of my work.

Here is a definition which I found for "best practices":

Best practices are a set of guidelines, ethics, or ideas that represent the most efficient or prudent course of action in a given business situation.

Best practices may be established by authorities, such as regulators, self-regulatory organizations (SROs), or other governing bodies, or they may be internally decreed by a company's management team.

A best practice is a method or technique that has been generally accepted as superior to any alternatives because it produces results that are superior to those achieved by other means or because it has become a standard way of doing things, e.g., a standard way of complying with legal or ethical requirements.

Notice here that it uses the term guidelines and not rules which are set in concrete and must be followed to the letter by everybody. Note also the phrase represent the most efficient or prudent course of action in a given business situation - if my business situation is different to yours then why should I follow your practices? I also do not recognise any governing bodies who have the authority to dictate how software should be written. There is no such thing as a "one size fits all" style as each team or individual is free to use whatever style fits them best. There are different guidelines for different languages (such as strictly typed vs. dynamically typed). There are different guidelines for different types of application (database applications vs. non-database applications). Different groups of programmers have their own ideas on what is best for them, so I choose to follow those ideas which are best for me and the type of applications which I write, ideas which have been tried and tested over several decades using several programming languages.

It was not until several years after I had started publishing what I had achieved that some so-called "experts" in the field of OOP informed me that everything I was doing was wrong, and because of that my work was totally useless. When they said "wrong" what they actually meant was "different from what they had been taught" which is not the same thing. What they had been taught, and which is still being taught today, is that in order to be a "proper" OO programmer you must follow the following sets of rules:

I have documented criticisms of some of these rules in the following:

Basically I look at the method which I have chosen and compare the amount of reusable code which it produces with the amount which could be produced by the "officially approved" practices, and in all cases I see that my personal method produces the most reusable code, therefore it *MUST* be better. It should be obvious that the more reusable code you have then the less code you have to write, and there is nothing less than no code at all. With my framework it is possible, as shown in this video, to create a database table, then create the tasks to maintain the contents of that table without writing any code at all - no PHP code, no HTML code, no SQL code - as the framework automatically performs all the standard processing in its collection of reusable modules. The only thing left for the application developer to do is insert the code for any business rules into the relevant "hook" methods.

My reply to these critics can be summed up as follows:

If I were to follow the same methods as you then my results would be no better than yours, and I'm afraid that your results are simply not good enough. The only way to become better is to innovate, not imitate, and the first step in innovation is to try something different, to throw out the old rules and start from an unbiased perspective. Progress is not made by doing the same thing in the same way, it requires a new way, a different way.

If my development methodology and practices allow me to be twice as productive as you, then that must surely mean that my practices are better than yours. If my practices are better then how can you possibly say that your practices are best?

Any methodology which helps a programmer to achieve high rates of productivity should be considered as a good methodology. This can be done by providing large amounts of reusable code, either in the form of libraries, frameworks or tools, which the programmer can utilise without having to take the time to write his own copy. It should be well understood that the less code you have to write then the less code you have to test and the less time it will take. Being able to produce cost-effective software in less time than your competitors means that your Time to Market (TTM) is quicker and, as time is money, your costs will be lower. Both of these factors will be appreciated by your paying customers much more than the "purity" of your development methodology.

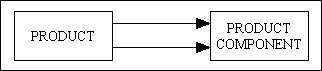

So how much reusable code do I have? Take a look at a simplified diagram of my application structure in Figure 1:

Figure 1 - A simplified view of my application structure

As you should be able to see this is a combination of the 3-Tier Architecture and the Model-View-Controller (MVC) Design Pattern. A more detailed diagram, with explanations, can be found in RADICORE - A Development Infrastructure for PHP.

The ability to write code to create classes and objects would be totally wasted unless you create classes for the right things. It would appear that my original choices were correct because, quite by accident, I created objects which matched the categories identified in the article How to write testable code and When to inject: the distinction between newables and injectables:

| Category | Description |
|---|---|
| Entities | An object whose job is to hold state and associated behavior. The state (data) can be persisted to and retrieved from a database. Examples of this might be Account, Product or User. In my framework each database table has its own Model class. |
| Services | An object which performs an operation. It encapsulates an activity but has no encapsulated state (that is, it is stateless). Examples of Services could include a parser, an authenticator, a validator or a transformer (such as transforming raw data into HTML, CSV or PDF). In my framework all Controllers, Views and DAOs are services. |
| Value objects | An immutable object whose responsibility is mainly holding state but may have some behavior. Examples of Value Objects might be Color, Temperature, Price and Size. PHP does not support value objects, so I do not use them. I have written more on the topic in Value objects are worthless. |

The components in the RADICORE framework fall into the following categories:

It should also be noted that:

This arrangement helps me to provide these levels of reusability. This means that after creating a new table in my database I can do the following simply by pressing buttons on a screen:

Note that the whole procedure can be completed in just 5 minutes without having to write a single line of code - no PHP, no HTML, no SQL. If you cannot match THAT level of productivity then any criticisms that my methods are wrong will always fall on deaf ears.

Note that some people claim that my framework can only be used for simple CRUD applications. These people have obviously not studied my full list of Transaction Patterns which provides over 40 different patterns which cover different combinations of structure and behaviour. I have used these patterns to produce a large ERP application which currently contains over 4,000 user transactions which service over 400 database tables in over 20 subsystems. While some of these user transactions are quite simple there are plenty of others which are quite complex.

I have often been told "you shouldn't be doing it that way, you should be doing it this way". When I look at what they are proposing and see immediately that it would have a negative impact on the elegance of my code and/or my levels of productivity then I simply refuse to follow their "advice". I have yet to see any suggestion which would improve my code, but I have seen many that would degrade it beyond all recognition. Rather than saying "let's run it up the flagpole and see who salutes it" these ideas fall into the category of "let's drop it in the toilet bowl and see who flushes it".

What is the cause of these bad ideas? Ever since I have been perusing the internet reading other people's ideas and posting ideas of my own on various forums I have come to the conclusion that there are two contributing factors - poor communication and poor comprehension.

These problems are exacerbated by peculiarities in the English language:

This means that when being read by a clueless newbie the meaning of a sentence can be ambiguous instead of exact, and if there is any room for ambiguity then some people will always attach the wrong meaning to a word and therefore end up with a corrupted meaning for the sentence which contains that word. If that same person uses different words to explain that corrupted meaning to a third party then it can become even more corrupt. The more people through whom the message passes the more likely it is that it gets more and more corrupted, just like it does in the children's game called Chinese Whispers.

There are also some other problems which can add to the confusion:

Attempting to apply the rules of one type of software to a different and incompatible type of software leads to claims such as OOP is not suitable for database applications. This shows a complete lack of understanding by the claimant - he doesn't know how databases work, and he doesn't know how to apply the mechanics of OOP to work with databases. By "mechanics" I mean nothing more than how to use the principles of encapsulation, inheritance and polymorphism in order to write cost-effective software. I built my own software while being totally unaware of these third-party rules, and my software has not suffered because of it. On the contrary, my development framework has flourished because of it as it has allowed me to become more productive than the users of any other framework, so that just shows how valuable (NOT!) these third-party rules are.

There are two ways in which an idea can be implemented - intelligently or indiscriminately.

Those who apply an idea or principle indiscriminately, who apply it in inappropriate circumstances, or who don't know when to stop applying it, are announcing to the world that they do not have the brain power to make an informed decision. They simply do it without thinking as they assume that someone else, namely the person who invented that principle, has already done all the necessary thinking for them. This leads to a legion of Cargo Cult programmers, copycats, code monkeys and buzzword programmers who are incapable of generating an original thought.

This problem also manifests itself with the inappropriate use of design patterns.

Listed below are some of the stupid ideas, or misinterpretations of good ideas, which I believe can result in crap code:

I disagree. OO programming and Procedural programming are exactly the same except the former supports encapsulation, inheritance and polymorphism while the latter does not. They are both concerned with writing imperative statements which are executed in a linear fashion with the only difference being the way in which code can be packaged - one uses plain functions while the other uses classes and objects. Further thoughts can be found at:

I disagree. A person called Yegor Bugayenko made the following dubious statements in various blog articles:

Inheritance is bad because it is a procedural technique for code reuse. .... That's why it doesn't fit into object-oriented programming.

I disagree. Inheritance does not exist in procedural languages, therefore it cannot be "a procedural technique for code reuse". Inheritance is one of the 3 pillars of OOP, so to claim that "it doesn't fit into object-oriented programming" is ridiculous beyond words.

Code is procedural when it is all about how the goal should be achieved instead of what the goal is.

I disagree. You are confusing imperative programming, which identifies the steps needed to perform a function, with declarative programming, which expresses the rules that a function should follow without defining the steps which implement those rules.

A method is procedural if the name is centered around a verb, but OO if it is centered around a noun.

I disagree. Classes in the business/domain layer, which represent the Model in MVC, represent entities, and entities are nouns. Methods represent the operations that can be performed on that entity, and operations (functions which can be performed) are always verbs.

Having used both procedural and OO languages for nearly 20 years apiece I have observed the following:

Object Oriented programming is exactly the same as Procedural programming except for the addition of encapsulation, inheritance and polymorphism. They are both designed around the idea of writing imperative statements which are executed in a linear fashion. The commands are the same, it is only the way they are packaged which is different. While both allow the developer to write modular instead of monolithic programs, OOP provides the opportunity to write better modules.
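The point about packaging can be illustrated with a minimal sketch. The function, class and field names below are hypothetical and are not taken from RADICORE; the only claim is that the same imperative statement can be packaged either as a plain function or as a method on a class.

```php
<?php
// Hypothetical example: the same imperative statement packaged two ways.

// Procedural packaging: a plain function operating on data passed to it.
function add_line_total(array $line): array
{
    $line['total'] = $line['quantity'] * $line['unit_price'];
    return $line;
}

// OO packaging: the same statement lives in a method, next to the data it uses.
class OrderLine
{
    public $data = [];

    public function __construct(array $data)
    {
        $this->data = $data;
    }

    public function addLineTotal(): array
    {
        $this->data['total'] = $this->data['quantity'] * $this->data['unit_price'];
        return $this->data;
    }
}

$input = ['quantity' => 3, 'unit_price' => 5];

$procedural = add_line_total($input);
$object     = new OrderLine($input);
$oo         = $object->addLineTotal();

var_dump($procedural === $oo); // bool(true): both produce the same array
```

The arithmetic is identical in both versions; what differs is that the class version can later take part in inheritance and polymorphism while the plain function cannot.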

In his article All evidence points to OOP being bullshit John Barker says the following:

Procedural programming languages are designed around the idea of enumerating the steps required to complete a task. OOP languages are the same in that they are imperative - they are still essentially about giving the computer a sequence of commands to execute. What OOP introduces are abstractions that attempt to improve code sharing and security. In many ways it is still essentially procedural code.

By "abstractions" he means the ability to create concrete classes which can inherit (share) code which is defined in abstract classes.

In his paper Encapsulation as a First Principle of Object-Oriented Design (PDF) Scott L. Bain wrote the following:

Object Orientation (OO) addresses as its primary concern those things which influence the rate of success for the developer or team of developers: how easy is it to understand and implement a design, how extensible (and understandable) an existing code set is, how much pain one has to go through to find and fix a bug, add a new feature, change an existing feature, and so forth. Beyond simple "buzzword compliance", most end users and stakeholders are not concerned with whether or not a system is designed in an OO language or using good OO techniques. They are concerned with the end result of the process - it is the development team that enjoys the direct benefits that come from using OO.

This should not surprise us, since OO is rooted in those best-practice principles that arose from the wise dons of procedural programming. The three pillars of "good code", namely strong cohesion, loose coupling and the elimination of redundancies, were not discovered by the inventors of OO, but were rather inherited by them (no pun intended).

The notion of abstraction has been a confusing concept for a long time, not just for me but for many others as well. After performing a search on the interweb thingy I found many descriptions, but nothing good enough to be called a "definition" which could explain the concept to a novice in unambiguous terms. That was until I came across Designing Reusable Classes which was published in 1988 by Ralph Johnson and Brian Foote in which they described abstraction as "the process of separating the abstract from the concrete, the general from the specific". This is performed by examining a group of objects looking for both similarities and differences where the similarities are common to all members of that group while the differences are unique to individual members. If there are common protocols (operations or methods) then class inheritance allows these methods to be placed in an abstract superclass so that they can be inherited and therefore shared by every concrete subclass which then need only contain the differences.
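As a minimal sketch of separating the general from the specific, assume two database tables that share the same way of building a query and differ only in their names and columns. All class, property and method names here are hypothetical, not RADICORE's own.

```php
<?php
// Hypothetical names throughout; not taken from the RADICORE source.

// The general: behaviour which is common to every table class.
abstract class AbstractTable
{
    public $tableName;          // the specific: supplied by each subclass
    public $fieldNames = [];    // the specific: supplied by each subclass

    // Shared by every subclass: build a simple SELECT statement.
    public function buildSelect(): string
    {
        return 'SELECT ' . implode(', ', $this->fieldNames)
             . ' FROM ' . $this->tableName;
    }
}

// The specific: each concrete class contains only its differences.
class Customer extends AbstractTable
{
    public $tableName  = 'customer';
    public $fieldNames = ['customer_id', 'name'];
}

class Product extends AbstractTable
{
    public $tableName  = 'product';
    public $fieldNames = ['product_id', 'description', 'price'];
}

echo (new Customer)->buildSelect(), PHP_EOL;
echo (new Product)->buildSelect(), PHP_EOL;
```

Each concrete class holds nothing but its differences, while the similarity (how a query is assembled) is written once in the abstract superclass.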

In a separate article called The meaning of "abstraction" I reveal how it is actually both a verb (process) and a noun (entity) which produces a result in two forms:

I have seen many descriptions of encapsulation which insist on including data hiding which, in my humble opinion, is derived from a misunderstanding of the term implementation hiding. Encapsulation, when originally conceived, did not specify data hiding as a requirement, and when PHP4 was released with its OO capabilities it did not include property and method visibility, so I never used it. Even though it was added in later versions of PHP I still don't use it. Why not? Simply because my code works without it, and adding it would take time and effort with absolutely no benefit. I have always thought that the idea of data hiding seems nonsensical - if the data is hidden then how are you supposed to put it in and get it out? Who are you supposed to be hiding it from, and why?

The true definition of encapsulation is as described in What is Encapsulation? as follows:

In object-oriented computer programming languages, the notion of encapsulation (or OOP Encapsulation) refers to the bundling of data, along with the methods that operate on that data, into a single unit. Many programming languages use encapsulation frequently in the form of classes. A class is a program-code-template that allows developers to create an object that has both variables (data) and behaviors (functions or methods). A class is an example of encapsulation in computer science in that it consists of data and methods that have been bundled into a single unit.

Encapsulation may also refer to a mechanism of restricting the direct access to some components of an object

Note that the second paragraph includes the word may, so the ideas of object visibility are optional, not a requirement.

My own personal definition of encapsulation is concise and precise:

The act of placing data and the operations that perform on that data in the same class. The class then becomes the 'capsule' or container for the data and operations. This binds together the data and functions that manipulate the data.

Note that this definition states that ALL the data and ALL the methods which operate on that data should be defined in the SAME class. Some clueless newbies out there use a warped definition of the Single Responsibility Principle to say that a class should not have more than a certain number of properties and methods where that number differs depending on whose definition you are reading.
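A small sketch of this definition, using a hypothetical `Invoice` class: the data and every operation which acts on that data sit in the same class. The property is left public only because the definition quoted above treats access restriction as optional.

```php
<?php
// Hypothetical class: data and the operations on that data in one capsule.
class Invoice
{
    // The data. Left public here, since the definition above treats
    // visibility restrictions as optional.
    public $lines = [];

    // The operations which work on that data live in the same class.
    public function addLine(string $description, float $amount): void
    {
        $this->lines[] = ['description' => $description, 'amount' => $amount];
    }

    public function total(): float
    {
        return array_sum(array_column($this->lines, 'amount'));
    }
}

$invoice = new Invoice();
$invoice->addLine('Widget', 10.0);
$invoice->addLine('Gadget', 2.5);
echo $invoice->total(), PHP_EOL; // 12.5
```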

This has a fairly simple definition:

The reuse of base classes (superclasses) to form derived classes (subclasses). Methods and properties defined in the superclass are automatically shared by any subclass. A subclass may override any of the methods in the superclass, or may introduce new methods of its own.

Note that I am referring to implementation inheritance (which uses the "extends" keyword) and not interface inheritance (which uses the "implements" keyword).
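The difference between the two keywords can be sketched as follows. The class names are hypothetical; the sketch only shows that `extends` hands working code to the subclass, whereas `implements` hands over method signatures whose bodies must still be written.

```php
<?php
// Hypothetical names, for illustration only.

// Implementation inheritance: the subclass receives working code.
abstract class Shape
{
    public function describe(): string
    {
        return static::class . ' with area ' . $this->area();
    }

    abstract public function area(): float;
}

class Square extends Shape
{
    public $side = 2.0;

    public function area(): float
    {
        return $this->side * $this->side;
    }
}

// Interface inheritance: the class receives only method signatures
// and must supply every method body itself.
interface HasArea
{
    public function area(): float;
}

class Circle implements HasArea
{
    public $radius = 1.0;

    public function area(): float
    {
        return M_PI * $this->radius ** 2;
    }
}

echo (new Square)->describe(), PHP_EOL; // Square with area 4
```

`Square` gains `describe()` without writing it, while `Circle` gains no code at all from `HasArea`.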

While it is possible to create deep inheritance hierarchies this is a practice which should be avoided due to the problems noted in Issues and Alternatives. People who encounter problems say that the fault lies with the concept of inheritance itself when in fact it lies with their implementation. This is why they Favour Composition over Inheritance. In Object Composition vs. Inheritance I found the following statements:

Most designers overuse inheritance, resulting in large inheritance hierarchies that can become hard to deal with. Object composition is a different method of reusing functionality. Objects are composed to achieve more complex functionality. The disadvantage of object composition is that the behavior of the system may be harder to understand just by looking at the source code. A system using object composition may be very dynamic in nature so it may require running the system to get a deeper understanding of how the different objects cooperate.

[....]

However, inheritance is still necessary. You cannot always get all the necessary functionality by assembling existing components.

[....]

The disadvantage of class inheritance is that the subclass becomes dependent on the parent class implementation. This makes it harder to reuse the subclass, especially if part of the inherited implementation is no longer desirable. ... One way around this problem is to only inherit from abstract classes.

This tells me that the practice of inheriting from one concrete class to create a new concrete class, of overusing inheritance to create deep class hierarchies, is a totally bad idea. This is why in MY framework I don't do this and only ever inherit from an abstract class. While inheritance itself is a good technique for sharing code among subclasses, the use of an abstract class opens up the possibility of being able to use the Template Method Pattern which is described in the Gang of Four book as follows:

Template methods are a fundamental technique for code reuse. They are particularly important in class libraries because they are the means for factoring out common behaviour.

Template methods lead to an inverted control structure that's sometimes referred to as the Hollywood Principle, that is, "Don't call us, we'll call you". This refers to how a parent class calls the operations of a subclass and not the other way around.

Not only do I *NOT* have any problems with inheritance, but by using it wisely I gain two benefits: I share the code contained in an abstract class, and I increase the amount of code I can reuse by implementing the Template Method Pattern for every method which a Controller calls on a Model.
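A minimal sketch of the Template Method Pattern with "hook" methods is shown here. The method names (`insertRecord`, `preInsert`, `doInsert`, `postInsert`) and the `Supplier` class are assumptions made for illustration and should not be read as RADICORE's actual API; `doInsert` is a stand-in for a real database call.

```php
<?php
// Hypothetical sketch; method names are illustrative, not RADICORE's API.
abstract class AbstractModel
{
    public $errors = [];

    // Template method: the invariant sequence of steps, defined once.
    public function insertRecord(array $row): array
    {
        $row = $this->preInsert($row);          // hook: custom business rules
        if (empty($this->errors)) {
            $row = $this->doInsert($row);       // standard processing
            $row = $this->postInsert($row);     // hook: follow-up actions
        }
        return $row;
    }

    // Standard step shared by all subclasses (a stand-in for a real DB call).
    public function doInsert(array $row): array
    {
        $row['saved'] = true;
        return $row;
    }

    // Hooks: do nothing by default; a subclass overrides only what it needs.
    public function preInsert(array $row): array
    {
        return $row;
    }

    public function postInsert(array $row): array
    {
        return $row;
    }
}

class Supplier extends AbstractModel
{
    public function preInsert(array $row): array
    {
        if (trim($row['name'] ?? '') === '') {
            $this->errors['name'] = 'Name is required';
        }
        $row['name'] = ucfirst(trim($row['name'] ?? ''));
        return $row;
    }
}

// A controller calls the template method; the parent class then calls
// the hook in the subclass ("Don't call us, we'll call you").
$model  = new Supplier();
$result = $model->insertRecord(['name' => ' smith ']);
print_r($result);
```

The subclass contains only the business rule; the sequence of steps, and the decision to skip the insert when errors exist, is inherited unchanged.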

If I am following the advice of experts and only inheriting from an abstract class and making extensive use of the Template Method Pattern, both of which provide a great deal of reusable code, and you are not, then how can you possibly say that your practices are better?

Some clueless newbies claim that inheritance is now out of date and that I am not following the latest "best practices" by still using it. I dismissed these ridiculous claims in Your code uses Inheritance. Other ridiculous claims can be found at the following:

I read this statement in several places but immediately dismissed it as hogwash as there was no explanation as to how inheritance breaks encapsulation. The author obviously had the wrong end of the stick regarding either one or both of these terms. I eventually found this description in wikipedia:

The authors of Design Patterns discuss the tension between inheritance and encapsulation at length and state that in their experience, designers overuse inheritance. They claim that inheritance often breaks encapsulation, given that inheritance exposes a subclass to the details of its parent's implementation. As described by the yo-yo problem, overuse of inheritance and therefore encapsulation, can become too complicated and hard to debug.

This description immediately tells me that inheritance itself is not a problem, it is the overuse of inheritance, especially when it results in hierarchies which go down to many levels, which is the problem. The notion that "inheritance exposes a subclass to the details of its parent's implementation" also strikes me as being hogwash. If you have class "B" which inherits from class "A" then all those methods and properties defined in class "A" are automatically available in class "B". That is precisely how it is supposed to work, so where exactly is the problem? If you have bugs in code which uses inheritance then I'm afraid that the fault lies in your code and not in the concept of inheritance itself.

This so-called "problem" also appears under the name Fragile Base Class which is described as follows:

The fragile base class problem is a fundamental architectural problem of object-oriented programming systems where base classes (superclasses) are considered "fragile" because seemingly safe modifications to a base class, when inherited by the derived classes, may cause the derived classes to malfunction. The programmer cannot determine whether a base class change is safe simply by examining in isolation the methods of the base class.

As has already been stated earlier, inheritance does not cause problems when used properly, which means don't inherit from concrete classes, don't create deep inheritance hierarchies, only inherit from abstract classes.

A clueless newbie called TomB criticised my use of inheritance with the following statement:

Your abstract class has methods which are reusable only to classes which extend it. A proper object is reusable anywhere in the system by any other class. It's back to tight/loose coupling. Inheritance is always tight coupling.

I debunked his claim in this article.

The first time I came across the Composite Reuse Principle (CRP) several questions jumped into my mind:

I could see no answers to any of these questions, so I dismissed this principle as being unsubstantiated.

This is supposed to be a solution to the problem that Inheritance breaks encapsulation. As that problem does not exist in my framework I have no need for that solution. Object Composition would not give me anywhere near the same amount of reusable code with so little effort, and as one of the principal aims of OOP is to increase the amount of reusable code I fail to see why I should employ a method which does the exact opposite.

My main gripe about object composition is that it plays against one of the primary aims of OOP which is to decrease code maintenance by increasing code reuse. Directly related to this is the amount of code you have to write in order to reuse some other code - if you have to write lots of code in order to take an empty object and include its composite parts then how is this better than using inheritance which requires nothing but the single word extends?

Another advantage to inheritance is that inheriting from an abstract class enables you to use the Template Method Pattern which the Gang of Four describe as:

Template methods are a fundamental technique for code reuse. They are particularly important in class libraries because they are the means for factoring out common behaviour.

As this powerful pattern cannot be provided with object composition then, for me at least, object composition is a useless idea. This is discussed further in Composition is a Procedural Technique for Code Reuse.
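As a minimal sketch of what the Template Method Pattern looks like in PHP, consider the following. The class, method and hook names are invented for illustration and are not the framework's actual code. The abstract class holds the invariant method which fixes the sequence of steps, while each subclass overrides only the hooks it needs:

```php
<?php
// Hypothetical names throughout; this is an illustration, not RADICORE code.
abstract class AbstractTable
{
    /** @var string[] messages collected during validation */
    public array $errors = [];

    // The template method: the sequence of steps is fixed here and is
    // inherited, unchanged, by every concrete subclass.
    final public function insertRecord(array $row): array
    {
        $this->errors = [];
        $row = $this->preInsert($row);      // hook: custom processing before
        if ($this->errors) {
            return $row;                    // abandon the insert on error
        }
        $row = $this->doInsert($row);       // standard processing
        return $this->postInsert($row);     // hook: custom processing after
    }

    // Standard step. A real implementation would talk to the database.
    protected function doInsert(array $row): array
    {
        $row['inserted'] = true;
        return $row;
    }

    // Hook methods do nothing unless a subclass overrides them.
    protected function preInsert(array $row): array  { return $row; }
    protected function postInsert(array $row): array { return $row; }
}

class Product extends AbstractTable
{
    // Only the custom rule is written here; everything else is inherited.
    protected function preInsert(array $row): array
    {
        if (($row['price'] ?? 0) < 0) {
            $this->errors[] = 'Price cannot be negative';
        }
        return $row;
    }
}

class Customer extends AbstractTable
{
    // No hooks overridden: standard processing only.
}

$product  = new Product();
$good     = $product->insertRecord(['name' => 'Widget', 'price' => 10]);
$bad      = $product->insertRecord(['name' => 'Widget', 'price' => -1]);
$customer = (new Customer())->insertRecord(['name' => 'Smith']);
```

The Customer class contains no code at all yet still supports insertRecord(), and the only thing written in Product is the one rule which makes it different.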

Another reason to dismiss this so-called "principle" as being illogical is the fact that it proposes replacing inheritance with composition which, according to what has been written about OO theory elsewhere, are totally incompatible concepts and therefore incapable of being used to achieve the same result. Inheritance is supposed to be the result of identifying "IS-A" relationships while composition is supposed to be the result of identifying "HAS-A" relationships. I challenge anyone to show me an example where two objects can exist in both an IS-A and HAS-A relationship at the same time. The two concepts are not interchangeable, therefore you cannot substitute one for the other.

I have yet to see any sample code which demonstrates that something which can be done with inheritance can also be done with composition, so until someone provides proof that these two ideas are actually interchangeable I shall stick with the idea that works and consign the other to the dustbin.

When attempting to discover a meaningful definition of this concept which showed how it could be used to provide reusable code I came across the following statement in this wikipedia article:

In programming languages and type theory, polymorphism is the provision of a single interface to entities of different types or the use of a single symbol to represent multiple different types.

I did not find this description useful at all due to my confusion with the words interface and type. I was even more confused with the different flavours of polymorphism. Which flavours could I use in PHP? Which flavours should I use in PHP? Which flavour provided the most benefits? Instead of attempting to implement polymorphism according to a published definition (which I could not find) I just went ahead and implemented the concepts of encapsulation and inheritance to the best of my ability and hoped that opportunities for polymorphism would appear somewhere along the line.

It just happened that after building my first Page Controller which operated on a particular table class, where the name of that table class was hard-coded into that Controller, I wanted to use the same Controller on a different table class but without duplicating all that code. I quickly discovered a method whereby, instead of hard-coding the table's name I could inject the name of the table class by using a separate component script which then passes control to one of the standard Page Controllers which are provided by the framework, one for each Transaction Pattern.
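The idea of supplying the class name from outside the Controller can be sketched as follows. This is only an outline under stated assumptions: the class names, the function names and the SQL strings are hypothetical, and the component scripts are modelled as functions so that the example fits in one file, whereas in practice each would be a small script of its own.

```php
<?php
// Hypothetical sketch: none of these names are taken from the framework.
abstract class BaseTable
{
    protected string $tableName = '';

    public function getData(string $where): string
    {
        // A real class would build and run a query; here we only describe it.
        return "SELECT * FROM {$this->tableName} WHERE {$where}";
    }
}

class PersonTable extends BaseTable
{
    public function __construct() { $this->tableName = 'person'; }
}

class OrderTable extends BaseTable
{
    public function __construct() { $this->tableName = 'order_header'; }
}

// The reusable Page Controller: it never mentions a specific table class.
// It is told which class to use via $tableClass.
function enquireController(string $tableClass, string $where): string
{
    $dbobject = new $tableClass();      // class name supplied at run time
    return $dbobject->getData($where);
}

// Each "component script" is tiny: it names the table class and then hands
// control to a standard controller.
function personEnquireComponent(): string
{
    $tableClass = 'PersonTable';
    return enquireController($tableClass, 'person_id = 1');
}

function orderEnquireComponent(): string
{
    $tableClass = 'OrderTable';
    return enquireController($tableClass, 'order_id = 42');
}

echo personEnquireComponent(), PHP_EOL;
echo orderEnquireComponent(), PHP_EOL;
```

Adding a third table to this sketch means writing one more table class and one more component function; the controller itself is untouched.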

Eventually I came across a less confusing description (I forget where) which simply defined polymorphism as:

Same interface, different implementation

Note that I take the word "interface" to mean method signature and not object interface.

This enabled me to expand it into the following:

The ability to substitute one class for another. This means that different classes may contain the same method signature, but the result which is returned by calling that method on a different object will be different as the code behind that method (the implementation) is different in each object.

This meant that, because of the architecture which I had designed, and the way in which I implemented it, I had stumbled across, by accident and not by design, an implementation of polymorphism which provided me with enormous amounts of reusable components. The mechanics of the implementation are as follows:

In this way I satisfy the "same interface" requirement by having the same methods available in every concrete table class via inheritance from an abstract table class.

I satisfy the "different implementation" requirement by having the constructor in each concrete table class load in the specifications of its associated database table, with various "hook" methods being available in every subclass to provide any custom processing.
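The two requirements can be illustrated with a short sketch. The names and the format of the field specifications below are assumptions made for this example, not the framework's own structures. Every subclass inherits the same validate() signature, but because each constructor loads a different specification the same call produces a different result:

```php
<?php
// Hypothetical sketch; names and spec format are assumptions.
abstract class GenericTable
{
    protected string $tableName = '';
    protected array $fieldSpec = [];   // column name => ['required' => bool]

    // Same method signature in every subclass, inherited from here.
    public function validate(array $row): array
    {
        $errors = [];
        foreach ($this->fieldSpec as $field => $spec) {
            if (!empty($spec['required']) && !isset($row[$field])) {
                $errors[$field] = "{$this->tableName}.{$field} is required";
            }
        }
        return $errors;
    }
}

class Person extends GenericTable
{
    public function __construct()
    {
        $this->tableName = 'person';
        $this->fieldSpec = [
            'person_id' => ['required' => true],
            'nickname'  => ['required' => false],
        ];
    }
}

class Invoice extends GenericTable
{
    public function __construct()
    {
        $this->tableName = 'invoice';
        $this->fieldSpec = [
            'invoice_id' => ['required' => true],
            'amount'     => ['required' => true],
        ];
    }
}

// The calling code is identical whichever object it is given.
$results = [];
foreach ([new Person(), new Invoice()] as $table) {
    $results[get_class($table)] = $table->validate([]);
}
print_r($results);
```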

This means that if I have 45 Page Controllers which can be used with any of my 400 table classes then I have 18,000 (yes, EIGHTEEN THOUSAND) opportunities for polymorphism. How many do you "experts" have?

Yet there are still some clueless newbies out there whose understanding of polymorphism is so warped that they have the audacity to criticise my implementation. If you take a look at What Polymorphism is not you will see false arguments such as:

Rubbish. Polymorphism does not require a parent/child relationship, only that multiple classes contain the same method signature. The same method can be defined manually instead of being inherited.

Rubbish. If I have 400 concrete table classes which inherit methods from the same abstract table class then those 400 classes are siblings which means that they are related by virtue of the fact that they all have the same parent. They all inherit and therefore share the same methods from that abstract class, and it is the sharing of method signatures which provides polymorphism.

Rubbish. Polymorphism does not require that the same method signature exists in multiple classes through inheritance, it only requires that it exists. This includes being defined manually instead of being inherited.
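To show that a shared method signature is all that PHP needs, here is a small sketch in which two classes have no common parent and implement no interface. The class and function names are made up for the example:

```php
<?php
// Two unrelated classes: no common parent, no interface.
class CsvExporter
{
    public function export(array $row): string
    {
        return implode(',', $row);
    }
}

class JsonExporter
{
    public function export(array $row): string
    {
        return json_encode($row);
    }
}

// No type hint on $exporter: any object with an export() method will do.
function output($exporter, array $row): string
{
    return $exporter->export($row);
}

$row = ['id' => 1, 'name' => 'Smith'];
echo output(new CsvExporter(), $row), PHP_EOL;   // 1,Smith
echo output(new JsonExporter(), $row), PHP_EOL;  // {"id":1,"name":"Smith"}
```

The calling function neither knows nor cares which class it has been given, which is "same interface, different implementation" without any inheritance.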

Many years ago I remember someone saying in a newsgroup posting that Object Oriented Programming (OOP) was not suitable for database applications. As "evidence" he pointed out the fact that relational databases were not object oriented, that it was not possible to store objects in a database, and that it required the intervention of an Object Relational Mapper (ORM) to deal with the differences between the software structure and the database structure. I disagreed totally with this opinion for the simple reason that I had been using OOP to build database applications for several years, and I had encountered no such problems. As far as I was concerned it was the blind following of OO theory which was the root cause of this problem. OO theory states that, in a database application, it is the design of the software which takes precedence and that the design of the database should be left until last as it is nothing more than "an implementation detail". After using two different design methodologies you then end up with two parts of your application which are supposed to communicate seamlessly with one another yet cannot because they are incompatible. This incompatibility is so common that it has been given the name Object-Relational Impedance Mismatch, for which the only cure is to employ that abomination called an Object-Relational Mapper (ORM).

As far as I was concerned it was not that OOP itself was not suitable for writing database applications, it was his lack of understanding of how databases work coupled with his questionable method of implementing OO concepts in his software which was causing the problem. You have to understand how databases work before you can build software that works with a database. I had the distinct impression that all these "rules" for OOP were written by people who had never written large numbers of components in a database application therefore had no clue as to how to achieve the best result.

I was designing and building database applications for 20 years before I switched to an OO language, so I knew how to design databases by following the rules of data normalisation. I also learned, after attending a course on Jackson Structured Programming (JSP), that it was far better to design the database first, then design the software to fit that database structure, than it was to design the software by completely disregarding the database structure. This echoes the words of Eric S. Raymond, the author of The Cathedral and the Bazaar, who put it like this:

Smart data structures and dumb code works a lot better than the other way around.

The incompatibilities between OO design and database design manifest themselves in the different ways they deal with such things as associations, aggregations and compositions which are described in UML modeling technique as follows:

How you identify and then deal with the classes which are affected by these relationships is then covered by the following:

In OO theory class hierarchies are the result of identifying "IS-A" relationships between different objects, such as "a CAR is-a VEHICLE", "a BEAGLE is-a DOG" and "a CUSTOMER is-a PERSON". This causes some developers to create separate classes for each of those types where the type to the left of "is-a" inherits from the type on the right. This is not how such relationships are expressed in a database, so it is not how I deal with it in my software. Each of these relationships has to be analysed more closely to identify the exact details.

Please refer to Using "IS-A" to identify class hierarchies and Confusion about the word "subtype" for more details on this topic.

Objects in the real world, as well as in a database, may either be stand-alone, or they have associations with other objects which then form part of larger compound/composite objects. In OO theory this is known as a "HAS-A" relationship where you identify that the compound object contains (or is comprised of) a number of associated objects. There are several flavours of association:

This is discussed in more detail in Using "HAS-A" to identify composite objects and Object Associations are EVIL.

In a previous blog post entitled In the world of OOP am I Hero or Heretic? there is a section labelled What are the benefits of OO Programming? which contains the following statement from a person who goes by the moniker lastcraft:

I find that OO is best as a long term investment. This falls into my manager's bad news (which I have shamelessly stolen from others at various times) when changing to OO...

1) Will OO make writing my program easier? No.

2) Will OO make my program run faster? No.

3) Will OO make my program shorter? No.

4) Will OO make my program cheaper? No.

The good news is that the answers are yes when you come to rewrite it!

If he really thinks that the benefits of OOP do not appear when first creating an application but only after you rewrite it then as far as I am concerned his approach to OOP is totally wrong. My own implementation has produced superior results with my very first attempt and not with any subsequent rewrite. Note that I have NEVER had to redesign and rewrite my code, all I have done is start from a solid foundation then enhance and expand it.

When the same terminology means different things to different people this leads to confusion and ambiguity. How can two people have a meaningful conversation if they are using the same words but are describing different things? Below are some examples of this confusion:

If you ask 10 different programmers what OOP really means you will get 10 different answers, and almost all of these will be so far off the mark I am truly amazed that their authors can get away with calling themselves "professional OO programmers". I have highlighted some of these goofy ideas in What OOP is NOT.

The only simple description I have ever found for OOP goes like this:

Object Oriented Programming is programming which is oriented around objects, thus taking advantage of Encapsulation, Inheritance and Polymorphism to increase code reuse and decrease code maintenance.

Note here that I am only describing those three features which differentiate an OO language from a Procedural language. Some people seem to think that OO has four parts, with the fourth being abstraction. I do not agree. If the language manual does not show the keyword(s) which implement one of these parts then that part cannot be a feature of the language, it is only a design concept.

Many other descriptions of the "requirements" of OOP refer to additions which were made at a later date to different OO languages. I do not regard these additions as being part of the founding principles, so I ignore them if I don't like them.

Anybody who takes the time to study my framework in detail, either by reading the copious amounts of documentation or by examining and running the sample application or the full framework which can be downloaded, should very quickly see that the amount of reusable code which is provided means that new user transactions can be created very quickly. More reusable code equates to higher levels of productivity, and methodologies which directly contribute to higher productivity should be regarded as being superior to those which don't.

When I am told that I should be following the same set of rules and practices as other "proper" OO programmers just to "be consistent" and not "rock the boat" I refuse as, in my humble opinion, the dogmatic adherence to a series of bad rules will do nothing but make me "consistently bad". Further thoughts on this topic can be found at:

There are some OO programmers out there who seem to think that it is forbidden to have your software components being aware of your database structure. Take the following as examples:

By following the "rules" of Object Oriented Design (OOD) they therefore produce an object structure which is totally out of sync with their database structure. Being "out of sync" produces a condition known as Object-Relational Impedance Mismatch. One proposed solution for this problem was the invention of an Object-Oriented Database (OODBMS), but their adoption and market share is minimal when compared with Relational Databases which have grown in sufficient power to lessen the need for a radically different approach. As a substitute the OO world has chosen to add in an extra piece of software known as an Object-Relational Mapper (ORM).

In my humble opinion this is a train wreck of a solution, or as someone else put it The Vietnam of Computer Science. Instead of writing code to deal with the after effects of this train wreck my approach is to adopt a design methodology which prevents the train from being wrecked in the first place. This approach is known as Prevention is better than Cure. Instead of wasting my time using one methodology to design my database and a different methodology to design my software I have completely ditched the need for OOD and instead create a class structure which is a mirror image of my database structure, one class for each table. This means that there is no mis-match, therefore no need to include code which deals with that mis-match.

I do this by first designing and building my database, then creating a separate concrete class for each database table. Some people say that this would produce huge amounts of duplicated code, but they obviously have not understood the concept of sharing code through inheritance. My method of only inheriting from an abstract class also means that I can make extensive use of the Template Method Pattern which is yet another mechanism of reusing code. The full list of savings which I am able to achieve is discussed further in Having a separate class for each database table *IS* good OO.

Further criticisms of ORMs can be found at Object Relational Mappers are EVIL.

Do you remember Hungarian Notation? This was invented by a Microsoft programmer called Charles Simonyi, and was supposed to identify the kind of thing that a variable represented, such as "horizontal coordinates relative to the layout" and "horizontal coordinates relative to the window". Unfortunately he used the word type instead of kind, and this had a different meaning to those who later read his description, so they implemented it according to their understanding of what it meant instead of the author's understanding. Thus they used it to differentiate between data types such as strings, integers, decimal numbers, floating point numbers, dates, times, booleans, et cetera. The result was two types of Hungarian Notation - Apps Hungarian and Systems Hungarian. You can read a full description of this in Making Wrong Code Look Wrong by Joel Spolsky.

In wikipedia the word "type" is defined as:

Noun

A grouping based on shared characteristics

In the world of OOP which has classes and objects the word "type" is often used as a synonym for the word "class" which are used as follows:

In the Gang of Four book, which was not written with PHP in mind, it states the following:

Specifying Object Interfaces

Every operation declared by an object specifies the operation's name, the objects it takes as parameters, and the operation's return value. This is known as the operation's signature. The set of all signatures defined by an object's operations is called the interface to that object. An object's interface characterises the complete set of requests that can be sent to the object. Any request that matches a signature in the object's interface may be sent to the object.

A type is a name used to denote a particular interface. An object may have many types, and widely different objects can share a type. Part of an object's interface may be characterised by one type, and other parts by other types. Two objects of the same type need only share parts of their interfaces. Interfaces can contain other interfaces as subsets. We say that a type is a subtype of another if its interface contains the interface of its supertype. Often we speak of a subtype inheriting the interface of its supertype.

...

Class versus Interface Inheritance

It is important to understand the difference between an object's class and its type.

An object's class defines how the object is implemented. The class defines the object's internal state and the implementation of its operations. In contrast, an object's type only refers to its interface - the set of requests to which it can respond. An object can have many types, and objects of different classes can have the same type.

This is irrelevant in PHP as classes and objects do not have different types. This is what the PHP4 Manual said:

Classes are types, that is, they are blueprints for actual variables. You have to create a variable of the desired type with the new operator.

You cannot assign a "type" to a class or an object, and if you use the gettype() function after you have instantiated a class into an object the result will always be "object", so testing for an object's "type" is a waste of time. There are other functions you may use to identify the class from which an object was instantiated, such as get_class(), get_parent_class() and is_subclass_of(). Personally I have no use for any of these as every concrete class in my Business/Domain layer IS-A database table because they all inherit from the same abstract table class. This means that using the words "type", "supertype" and "subtype" has no special meaning in PHP. They are the same as "class", "superclass" and "subclass".
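The behaviour of these built-in functions is easy to check. In the sketch below the three class names are hypothetical; the functions themselves are standard PHP:

```php
<?php
// Class names are hypothetical; the functions are built into PHP.
abstract class TableBase {}
class PersonTbl extends TableBase {}
class ProductTbl extends TableBase {}

$person  = new PersonTbl();
$product = new ProductTbl();

var_dump(gettype($person));                       // "object"
var_dump(gettype($product));                      // "object", the same again
var_dump(get_class($person));                     // "PersonTbl"
var_dump(get_parent_class($person));              // "TableBase"
var_dump(is_subclass_of($product, 'TableBase'));  // true
```

Whatever class is instantiated, gettype() has only the one answer; it is the class functions which tell the objects apart.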

When controlling or dealing directly with real-world entities such as CUSTOMER, PRODUCT and ORDER it would be reasonable to assume that each of those entities requires its own class as each entity has an entirely different set of methods and variables. However, when writing a database application, such as an enterprise application, you are not communicating with any entities in the real world, you are only communicating with information about those entities in a database, and that information is held in entities called tables. It is also obvious to any programmer who has experience with working with databases that every table, regardless of its contents, is subject to exactly the same set of operations/methods, and these methods are Create, Read, Update and Delete (CRUD). It would be inefficient, as well as bad OOP, for each table class to redefine the same set of CRUD operations as this would be against one of the aims of OOP which is to create as much reusable software as possible. It would therefore be a good idea to define these common operations in a superclass so that they can be inherited by each subclass.

But how can this be done in OOP? The answer was provided in 1988 by Ralph E. Johnson & Brian Foote in their paper Designing Reusable Classes in which they described a technique known as programming-by-difference. This involves the creation of an abstract class to hold the similarities which can then be inherited by a group of concrete subclasses which identify the differences. This is supported by the following statement which, believe it or not, was given as an argument against my practice of creating a separate class for each database table:

The concept of a table is abstract. A given SQL table is not, it's an object in the world.

In the RADICORE framework the abstract table class contains all the common table methods while each concrete table class provides its own values for the common table properties.

Note that inheriting from an abstract class is supposed to be the best way of using inheritance. It also opens up the possibility of using the Template Method Pattern in which the abstract class contains the invariant methods while each individual subclass can contain its own set of variable "hook" methods to override the standard processing.

It is therefore wrong to say that in a database application each physical table is a different "type" when in fact it is a different implementation of the same abstract type. Each concrete table class shares the methods which it inherits from the abstract table class and is therefore a subclass of the abstract superclass.

Far too many programmers jump to the wrong conclusion when they try dealing with the "IS-A" test to identify class hierarchies. When they encounter such statements as "A VolkswagenBeetle is-a Car, a Sportscar is-a Car" they automatically assume that "Car" is a type and "VolkswagenBeetle" and "Sportscar" are subtypes that then require separate subclasses which are related through inheritance.

In the article Polymorphism and Inheritance are Independent of Each Other I came across the following sample C++ code which follows this train of thought:

```cpp
// C++ polymorphism through inheritance
class Car {
public:
    // declare signature as pure virtual function
    virtual bool start() = 0;
    virtual ~Car() = default;
};

class VolkswagenBeetle : public Car {
public:
    bool start() override {
        // implementation code
        return true;
    }
};

class SportsCar : public Car {
public:
    bool start() override {
        // implementation code
        return true;
    }
};

// Invocation of polymorphism
int main() {
    Car* cars[] = { new VolkswagenBeetle(), new SportsCar() };
    for (int i = 0; i < 2; i++) {
        cars[i]->start();
        delete cars[i];
    }
}
```

In this example Car is a type while VolkswagenBeetle and SportsCar are subtypes because they inherit from the Car class. Note that each of these subtypes has its own class. Note also that while a subtype can add extra methods to those which are already defined in the supertype it cannot remove any of those methods. This may cause problems if a subtype inherits a method which it cannot use (refer to the circle-ellipse problem for an example).

The idea that each subtype requires its own class is an alien concept in a database. In the above example I would not have a separate table for each type of car, I would have a single table to hold the details for all cars. I would therefore have a single CAR class which would handle all the rows in that database table. I certainly would NOT have a separate class for each row.

This idea simply does not work in a database application as each physical table should have its own class, so if each subtype has its own subclass then each subtype should have its own table. In the real world there would be a single table called VEHICLE which could hold the details of any number of different vehicles, and this table would have separate properties/columns for manufacturer, model, vehicle_type, seat_count, door_count, et cetera. The columns for manufacturer, model and vehicle_type would be foreign keys to other tables which contain the list of available options for each of those columns. The ability to add another option to each foreign key column would require no more effort than adding an entry to the respective foreign table. It certainly would NOT involve creating a new (sub)class as this would involve changing code to access this new class. When I see an OO programmer using the word "subtype" I invariably translate this to mean "foreign key".
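A rough sketch of "subtype as foreign key" follows. Plain arrays stand in for the database tables, and every table name, column value and class name is invented for the example:

```php
<?php
// Arrays stand in for database tables; all names and values are invented.
$vehicleTypeTable = [
    'BEETLE' => 'Volkswagen Beetle',
    'SPORTS' => 'Sports car',
];

$vehicleTable = [
    ['vehicle_id' => 1, 'vehicle_type' => 'BEETLE', 'door_count' => 2],
    ['vehicle_id' => 2, 'vehicle_type' => 'SPORTS', 'door_count' => 2],
];

// One class for the VEHICLE table, whatever kind of vehicle a row describes.
class Vehicle
{
    private array $rows;
    private array $types;

    public function __construct(array $rows, array $types)
    {
        $this->rows  = $rows;
        $this->types = $types;
    }

    // Describe every row, resolving the foreign key against the lookup table.
    public function describeAll(): array
    {
        $out = [];
        foreach ($this->rows as $row) {
            $out[] = $row['vehicle_id'] . ': ' . $this->types[$row['vehicle_type']];
        }
        return $out;
    }
}

// Adding a new kind of vehicle is a data change, not a code change.
$vehicleTypeTable['VAN'] = 'Delivery van';
$vehicleTable[] = ['vehicle_id' => 3, 'vehicle_type' => 'VAN', 'door_count' => 4];

$vehicle = new Vehicle($vehicleTable, $vehicleTypeTable);
print_r($vehicle->describeAll());
```

The new "Delivery van" option appears without a VAN class ever being written.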

In a database application each physical table is not a separate "type" which requires its own collection of "subtypes" - the concept of a database table can be described in an abstract class while each physical table is a concrete implementation of that abstract type. Consider the following:

It should therefore be obvious that both are different blueprints for what is essentially the same type of entity, so there should always be a one-to-one relationship between "table" and "class". Furthermore, each and every table in a database, regardless of its contents, is subject to exactly the same set of Create, Read, Update and Delete (CRUD) operations, which means that, by following the advice given in Designing Reusable Classes which was published in 1988 by Ralph E. Johnson & Brian Foote, those common operations should be placed in an abstract class so that they can be shared by every concrete table class using the mechanism of inheritance. This was blindingly obvious to me when it came time to write the OO code for my second database table, but that was because I had 20 years of previous experience of writing database applications and had not been exposed to the misleading thoughts of OO "experts" who had no such experience. Only people who have direct experience of designing and working with databases which have been properly normalised are qualified to formulate principles for creating database applications.

The only difference between one table and another is its structure (the columns), so the only difference between one table class and another should be the description of that structure. By using an abstract class I am able to implement the Template Method Pattern which in turn means that all standard processing is carried out by invariant methods which are inherited from the abstract class while any custom processing can be carried out by "hook" methods in each concrete subclass.

The word "state" has several meanings, among which are:

state (condition) - as in "The building was in a state of disrepair".

However, in computer science a system is described as stateful if it is designed to remember preceding events or user interactions; the remembered information is called the state of the system. This is in contrast to a stateless system which does not maintain any memory of its state between function calls.

In 2018 I came across an article called Objects should be constructed in one go in which Matthias Noback said:

When you create an object, it should be complete, consistent and valid in one go.

When I had the audacity to challenge this statement Matthias Noback, being a snowflake who cannot tolerate opinions which are different from his own, promptly deleted my response, which forced me to create my own article at Re: Objects should be constructed in one go. As far as I am concerned the true definition of a constructor is as follows:

The purpose of a constructor is to initialize an object into a sane state/condition so that it can accept subsequent calls on any of its public methods.

Notice here that the word state means condition. It does NOT mean that all the object's properties must be loaded with pre-validated data. It is perfectly acceptable to load and validate data using separate public methods.
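A minimal sketch of this reading of "sane state" is shown below. The class and its single validation rule are hypothetical. The constructor leaves the object empty but usable, and data is loaded and validated afterwards through separate public methods:

```php
<?php
// Hypothetical class; illustrates one reading of "sane state".
class CustomerRecord
{
    private array $fieldArray = [];   // empty, but ready for use
    private array $errors = [];

    public function __construct()
    {
        // Nothing to load here: the object only needs to be ready to
        // accept calls on its public methods.
    }

    // Data arrives after construction, unvalidated.
    public function load(array $data): void
    {
        $this->fieldArray = $data;
    }

    // Validation is a separate step which reports problems instead of
    // preventing the object from existing.
    public function validate(): bool
    {
        $this->errors = [];
        if (trim($this->fieldArray['name'] ?? '') === '') {
            $this->errors['name'] = 'Name is required';
        }
        return $this->errors === [];
    }

    public function getErrors(): array
    {
        return $this->errors;
    }
}

$customer = new CustomerRecord();       // usable straight away
$customer->load(['name' => '']);
$firstAttempt = $customer->validate();  // false, with an error message

$customer->load(['name' => 'Smith']);
$secondAttempt = $customer->validate(); // true
```

Because invalid input produces an error message and not a failed construction, the same object can be corrected and revalidated, which is what a form that is redisplayed to the user requires.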

Matthias Noback's opinion is in total contrast to that of Yegor Bugayenko who wrote an article called Constructors Must Be Code-Free. It is simply not possible for both of these opinions to be right, and as they both disagree with the true definition of a constructor I consider them both to be completely wrong and not worth the value of the toilet paper on which they are written.

Before I became involved in OOP I understood the word "interface" to be shorthand for Application Programming Interface (API) which has the following definition:

An application programming interface (API) is a connection between computers or between computer programs. It is a type of software interface, offering a service to other pieces of software. A document or standard that describes how to build or use such a connection or interface is called an API specification. A computer system that meets this standard is said to implement or expose an API. The term API may refer either to the specification or to the implementation.

[....]

One purpose of APIs is to hide the internal details of how a system works, exposing only those parts a programmer will find useful and keeping them consistent even if the internal details later change. An API may be custom-built for a particular pair of systems, or it may be a shared standard allowing interoperability among many systems.

All the API documentation which I read contained a list of function calls (sometimes called function or method signatures) which identified the function name and its input and output arguments, plus a description of what the function actually did. The code behind the function, the implementation, was never revealed.

Later, while reading articles on OOP, I came across some confusing references to the term "interface" which had a different meaning. I did not understand this meaning as PHP4 did not support this idea. I later discovered this meaning when PHP5 was released. I looked at how much additional code was required to implement this feature, then I looked for the benefits. Guess what? There ARE no benefits, just costs. All my existing code, which calls method signatures inside concrete classes, would not work any differently or faster by creating abstract classes using the word interface then modifying the concrete class to use the word implements. No matter how many articles I read which said that these new-fangled interfaces were the best thing since sliced bread I just could not see the point. As I already made extensive use of an abstract class in my framework I could see no need for abstract interfaces.

Those programmers who are aware of the history of OOP should know that interfaces were only added to early statically typed languages to get around the problem of not being able to provide polymorphism without the use of inheritance. PHP, being dynamically typed, does not have this problem and can provide polymorphism without the use of either inheritance or interfaces. This means that interfaces are the solution to a problem which does not exist in PHP, which makes them totally redundant and a violation of the YAGNI principle. This topic is discussed in Object Interfaces are EVIL.

I later came across the principle of "program to the interface, not the implementation" which confused me even more. If I am writing an application which has been divided into multiple smaller modules instead of a single huge block of monolithic code then I am automatically calling each module by its method signature without being concerned about that method's implementation. I know what the method does (or is supposed to do) but I do not know or even care about how it does it.

If this is the way that all modular software has behaved since computers were invented, then why is it expressed as a new rule just for OOP?

I often read in various articles, blog posts or newsgroup comments about how certain practices allow programmers to write code which is "decoupled" as against "coupled", where the latter is supposed to be a bad thing. This is a typical example:

Dependency injection is a programming technique that makes a class independent of its dependencies. It achieves that by decoupling the usage of an object from its creation.

As far as I am concerned the term "coupling" has absolutely nothing to do with separating the place where an object is instantiated from where a method on that object is called. It is only concerned with how two modules interact because of a call from one module to another, in which case the first module is dependent on the second module. A dependency between two objects only exists if object "A" contains a call to a method in object "B". This means that object "A" is dependent on the contents of object "B" in order to carry out its processing. It would be wrong to say that object "B" is dependent on object "A" simply because there is no call from object "B" to object "A".

Some people seem to think that coupling can be eliminated by "decoupling", which involves replacing a call from Object "A" to object "B" with an intermediate call through object "X" which then calls object "B". Because there is no direct call from "A" to "B" then "A" and "B" are not coupled, right? WRONG! Object "A" is still dependent on object "B" because it requires the services of object "X" which in turn requires the services of object "B". The coupling may be indirect instead of direct, but it is still there. All you have done is replace one method call with two, which doubles the number of places which may have to be updated due to the ripple effect caused by tight coupling. I have written more on this topic in Decoupling is delusional.

It is also wrong, in my humble opinion, to state that inheritance produces tight coupling between the superclass and the subclass. When the subclass is instantiated into an object the result is a single object which combines the methods and properties from both classes - it does not result in two objects with method calls from one to the other. A superclass and a subclass are not "coupled" because of inheritance, they are "combined" into a single object. Because the result is a single object and not two objects there is no call from one object to the other, therefore there is no "coupling". The fact that when a method is called on an object the signature of the method being called may be taken from either the subclass or the superclass cannot be classed as a problem which is to be avoided as that is the very nature of inheritance, the way that code is being shared.
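The point about a single combined object can be sketched as follows. The names table_demo and product_demo are hypothetical stand-ins, not the framework's actual classes.

```php
<?php
// Hypothetical superclass holding the shared method.
abstract class table_demo {
    public $tablename;

    public function getTableName() {
        return $this->tablename;
    }
}

// Hypothetical subclass which supplies only its own details.
class product_demo extends table_demo {
    public function __construct() {
        $this->tablename = 'product';
    }
}

// One "new" produces one object which combines both classes.
$object = new product_demo;

// The inherited method is called on that same object, not on a second one.
$name    = $object->getTableName();
$isSuper = $object instanceof table_demo;
$isSub   = $object instanceof product_demo;
```

Only one object is ever created, and it answers to both class names, so there is no second object to be coupled to.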

Two objects are said to be "coupled" because one of them calls a method signature that exists in the other. They cannot become decoupled unless that method call, and therefore the dependency, is removed. Whether two objects are coupled or not, either directly or indirectly, is therefore a binary condition - it is either TRUE or FALSE, they are either COUPLED or NOT COUPLED. The fact that with Dependency Injection the identity of the dependent (called) object can be swapped at runtime does not remove the dependency between those two objects or remove the coupling as the call from object "A" to object "B" still exists.
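As a rough illustration, assuming some hypothetical classes which are not part of any real library, here is a case where the dependent object is injected and can be swapped at run time. The call from the first object to the second is still present in the code.

```php
<?php
// Hypothetical object "A": its dependent object is injected, not instantiated here.
class order_demo {
    private $dependent;

    public function __construct($dependent) {
        $this->dependent = $dependent;
    }

    public function process($data) {
        // The call from "A" to "B" still exists, so the dependency still exists.
        return $this->dependent->validate($data);
    }
}

// Two hypothetical candidates for object "B", sharing a method signature.
class strict_validator_demo {
    public function validate($data) {
        return !empty($data['id']);
    }
}

class lax_validator_demo {
    public function validate($data) {
        return true;
    }
}

// The identity of "B" is chosen at run time, but "A" still calls it.
$a1 = new order_demo(new strict_validator_demo);
$a2 = new order_demo(new lax_validator_demo);
$r1 = $a1->process(array());
$r2 = $a2->process(array());
```

Swapping the injected object changes which implementation runs, but if the signature of validate() were to change then order_demo would have to change with it, whichever object was injected.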

In OOP when two objects are coupled it is considered to be good practice to aim for loose coupling instead of tight coupling so as to avoid the ripple effect where a change to a method signature in one object requires a change in all the places where that method signature is called. This means that certain statements are wrong, such as this one which I found at Decoupling Patterns:

A powerful tool we have for making change easier is decoupling. When we say two pieces of code are "decoupled", we mean a change in one usually doesn't require a change in the other.

Instead of "decoupling" two pieces of code what the author is actually describing sounds more like the "separation" of two pieces of code so that they do not exist in the same module. This practice already has a proper name, and that is either the Single Responsibility Principle (SRP) or Separation of Concerns (SoC). He is using the wrong terminology, which shows a lack of education on his part.

In The Importance Of Decoupling In Software Development I found this definition of decoupling:

Decoupling is isolating the code that performs a specific task from the code that performs another task.

This again is an example of using the wrong terminology. The correct term in this situation is not "isolating" or "decoupling", it is "separating", as mentioned previously in the Single Responsibility Principle (SRP) or Separation of Concerns (SoC).

Later on in the same article it says:

A common design pattern for solving problems like these is called Inversion of Control (IOC). IOC is a software architecture pattern where control flow goes against traditional methods. Instead of having modules dependent on each other, IOC works by having modules depend on an intermediary module with abstracted services. This intermediary module seamlessly manages all operations and allows you to switch between different service providers.

Here he is mixing up the terminology so much that all he is doing is creating buzzword soup.

The ability to "switch between different service providers" is known as Dependency Injection where the identity of the dependent object is not known until run time. A dependent object is still called, therefore there is coupling, but it is wrong to say that control is inverted as the call between the two modules remains the same and does not change direction.

This is yet another case where a single word has different and unrelated meanings:

Classes should favor polymorphic behavior and code reuse by containing instances of other classes that implement the desired functionality

When I looked for an example which proved the superiority of composition over inheritance I found the following code in Inheritance vs. Composition in PHP:

class Vehicle {

public function move() {

// ...

}

}

class Car extends Vehicle {

public $engine;

public function __construct(Engine $engine) {

$this->engine = $engine;

}

public function move() {

$this->engine->start();

// ...

}

}

class Engine {

public function start() {

// ...

}

}

$car = new Car(new Engine());

$car->move();

This to me is total nonsense as methods like $engine->start and $car->move simply will never appear in a database application in which you are NOT communicating with objects in the real world as all you are doing is maintaining information about those objects in a database. The objects in a database are called "tables", and the only operations which can be performed on a table are Create, Read, Update and Delete (CRUD). Because of this I will use examples from my own code, as shown below:

class default_table {

public function insertRecord($fieldarray) {

// ...

return $fieldarray;

}

public function updateRecord($fieldarray) {

// ...

return $fieldarray;

}

public function deleteRecord($fieldarray) {

// ...

return $fieldarray;

}

public function getData($where) {

// ...

return $fieldarray;

}

}

class product {

public function __construct() {

$this->default_table = new default_table;

}

public function insertRecord($fieldarray) {

$fieldarray = $this->default_table->insertRecord($fieldarray);

return $fieldarray;

}

public function updateRecord($fieldarray) {

$fieldarray = $this->default_table->updateRecord($fieldarray);

return $fieldarray;

}

public function deleteRecord($fieldarray) {

$fieldarray = $this->default_table->deleteRecord($fieldarray);

return $fieldarray;

}

public function getData($where) {

$fieldarray = $this->default_table->getData($where);

return $fieldarray;

}

}

As you can see this still requires a great deal of code in the product class as each method in the default_table class requires a corresponding method in the product class in order to call it. Now look at how much code we can remove yet still produce exactly the same effect. Note the use of the keywords abstract and extends:

abstract class default_table {

public function insertRecord($fieldarray) {

// ...

return $fieldarray;

}

public function updateRecord($fieldarray) {

// ...

return $fieldarray;

}

public function deleteRecord($fieldarray) {

// ...

return $fieldarray;

}

public function getData($where) {

// ...

return $fieldarray;

}

}

class product extends default_table {

public function __construct() {

// ...

}

}

The actual contents of my abstract table class are listed in more detail at common table methods.

Notice that this method requires nothing in the concrete subclass except the constructor, so that's a huge amount of code that I don't have to write.

Note also that there is a significant loss of functionality when replacing inheritance with composition - the inability to use the Template Method Pattern and its "hook" methods. This pattern implements Inversion of Control (IoC) which is also known as the Hollywood Principle (Don't call us, we'll call you) which is a distinguishing factor of all frameworks. Without it the RADICORE framework would not be much of a framework, so on that fact alone object composition is not a viable alternative.
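To show what would be lost, here is a minimal sketch of the Template Method Pattern with "hook" methods. The class names and the hook names pre_insert and post_insert are hypothetical and chosen only for illustration; they are not the framework's actual method names.

```php
<?php
// Hypothetical abstract class containing the template method.
abstract class table_demo {
    // Template method: the fixed sequence of steps is defined once, here.
    public function insertRecord($fieldarray) {
        $fieldarray = $this->pre_insert($fieldarray);   // hook before the insert
        $fieldarray['saved'] = true;                    // stand-in for the real INSERT
        $fieldarray = $this->post_insert($fieldarray);  // hook after the insert
        return $fieldarray;
    }

    // Hook methods do nothing unless a subclass overrides them.
    protected function pre_insert($fieldarray) {
        return $fieldarray;
    }

    protected function post_insert($fieldarray) {
        return $fieldarray;
    }
}

// The concrete subclass supplies only the custom logic it needs.
class product_demo extends table_demo {
    protected function pre_insert($fieldarray) {
        $fieldarray['product_name'] = strtoupper($fieldarray['product_name']);
        return $fieldarray;
    }
}

$result = (new product_demo)->insertRecord(array('product_name' => 'widget'));
```

The subclass never calls the hook itself. The inherited template method calls it at the right moment, which is the Hollywood Principle in action, and it only works because the subclass and superclass are combined through inheritance.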

A composition relationship is where one object (often called the constituted object, or part/constituent/member object) "belongs to" (is part or member of) another object (called the composite type), and behaves according to the rules of ownership.

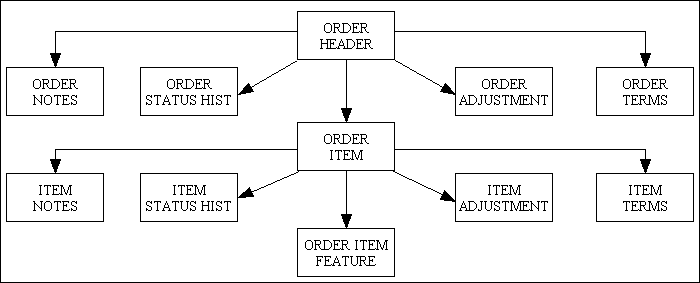

As usual, true OO aficionados like to make things more complicated than they really are by inventing several types of composition, but in the context of database applications I have seen only two possibilities. Figure 2 shows the idea of a single object known as an ORDER which, following the rules of data normalisation, is broken down into a group of tables which are related in such a way as to form a fixed hierarchy. In the database this is not a single object, it is a group of tables which are joined together in a series of one-to-many or parent-child relationships.

Figure 2 - an aggregate ORDER object (a fixed hierarchy)

This is explained in more detail in Object Associations are EVIL - Figure 5.

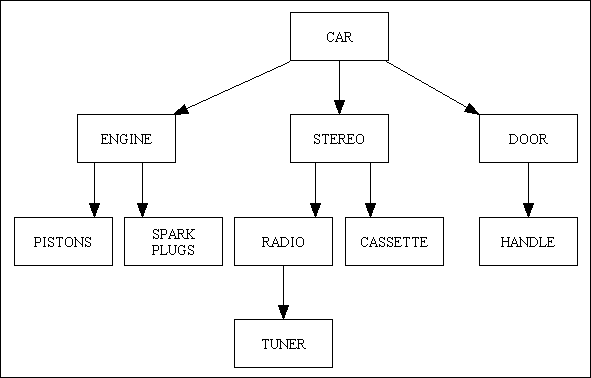

Figure 3 shows what looks like a similar structure, but it is completely different in that each of those entities - Car, Engine, Piston, Spark Plugs, Stereo, Door, et cetera - are NOT separate tables in the database, they are separate rows in a single PRODUCT table. The relationship between one product and another is maintained on a separate PRODUCT-COMPONENT table, as shown in Figure 4.

Figure 3 - an aggregate BILL-OF-MATERIALS (BOM) object (an OO view)

The idea that each of these objects is regarded as a separate entity, thereby requiring a separate class, is bonkers. They are simply separate rows in the PRODUCT table, therefore can be handled by a single PRODUCT class.

This is explained in more detail in Object Associations are EVIL - Figure 6.

Figure 4 - an aggregate BILL-OF-MATERIALS (BOM) object (a database view)

This structure shows two one-to-many relationships where the PRODUCT-COMPONENT table has two foreign keys - product_id_snr and product_id_jnr - which both point back to different entries on the PRODUCT table. The primary key of the PRODUCT-COMPONENT table is a combination of these two foreign keys. The maintenance tasks for the PRODUCT table and PRODUCT-COMPONENT table are totally separate. The complete BOM in its entirety can be viewed using a task built from one of the TREE VIEW patterns.

This is explained in more detail in Object Associations are EVIL - Figure 7.
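The two-table structure described for Figure 4 can be sketched with plain arrays standing in for database rows. The sample data and the function build_tree are hypothetical; only the column names product_id_snr and product_id_jnr come from the description above.

```php
<?php
// Hypothetical sample data: every entity is just a row in PRODUCT.
$product = array(
    'CAR'    => 'Car',
    'ENGINE' => 'Engine',
    'PISTON' => 'Piston',
    'DOOR'   => 'Door',
);

// PRODUCT-COMPONENT: each row links a senior product to a junior product.
$product_component = array(
    array('product_id_snr' => 'CAR',    'product_id_jnr' => 'ENGINE'),
    array('product_id_snr' => 'CAR',    'product_id_jnr' => 'DOOR'),
    array('product_id_snr' => 'ENGINE', 'product_id_jnr' => 'PISTON'),
);

// Walk the hierarchy recursively and return one indented line per product.
function build_tree($product_id, $product, $product_component, $depth = 0) {
    $lines = array(str_repeat('  ', $depth) . $product[$product_id]);
    foreach ($product_component as $row) {
        if ($row['product_id_snr'] === $product_id) {
            $children = build_tree($row['product_id_jnr'], $product, $product_component, $depth + 1);
            $lines = array_merge($lines, $children);
        }
    }
    return $lines;
}

$tree = build_tree('CAR', $product, $product_component);
```

Adding a new kind of component means adding rows to the two tables, not writing a new class.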

I have seen far too many instances where someone with experience says "do not overuse/misuse/abuse X" where "X" can be almost anything, and this is immediately translated into "do not use X" by those who do not understand the difference in meaning. Here are some examples:

"Do not use global variables." Why not? What are the problems with global variables? Are these "problems" down to a fault with the concept or with its implementation? Please refer to Your code uses Global Variables for a more detailed discussion.

"Do not use singletons." Why not? Singletons have been among the list of well known design patterns for over a decade, so what can possibly be wrong with them? I have read many blog and newsgroup posts which echo this rule, but few provide any sort of proof that the rule has any substance. When I eventually found a list of reasons I studied them and came to the conclusion that it is not the idea of singletons which is at fault, it is their choice of implementation. Everybody follows the same idea that each class must contain a getInstance() method which forces every instance of that class to be a singleton whether you like it or not.

I use a totally different approach. I have a single static getInstance() function within a standalone singleton class. This means that I can obtain an instance of any class using either of these two lines of code:

$object = new classname;

$object = singleton::getinstance('classname');

Please refer to Singletons are NOT evil for more details.
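A standalone singleton class of this kind might look something like the following. This is a sketch under my own assumptions and not the framework's actual code; the names singleton_demo and product_demo are hypothetical.

```php
<?php
// Hypothetical standalone class: the only place which knows about singletons.
class singleton_demo {
    public static function getInstance($class) {
        // One shared instance per class name, created on first request.
        static $instances = array();
        if (!isset($instances[$class])) {
            $instances[$class] = new $class;
        }
        return $instances[$class];
    }
}

// An ordinary class with no getInstance() method of its own.
class product_demo {
    public $count = 0;
}

$a = singleton_demo::getInstance('product_demo');  // shared instance
$b = singleton_demo::getInstance('product_demo');  // the same shared instance
$c = new product_demo;                             // a separate, ordinary instance
```

The class being instantiated contains no singleton code at all, so the caller decides whether it wants the shared instance or a fresh one.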

"Do not use inheritance." Why not? What are the problems with inheritance? What is the alternative? How is it better? If you read What is the meaning of Inheritance? you will see that any so-called "problems" are down entirely to the overuse of inheritance and not the concept itself. The solution to these problems is to follow these steps:

This rule is a complete non-starter for me as without inheritance I would not be able to use the Template Method Pattern which is a fundamental technique for code reuse and which plays a major role in my framework.

Although these three words are different they mean virtually the same thing: